Cloud Native: The Power of Standards, Kata and the OCI Runtime

Go to the website of the Cloud Native Computing Foundation and you’ll be confronted with this picture of the cloud world:

Look closely and you’ll see the world is divided by function (e.g. Databases and Container Runtimes) and each category has several if not dozens of projects. In the cloud, as in technology broadly, there’s a lot of benefit to interoperability. That leads to standards. This post is about how standards from the Open Container Initiative (OCI) enable Kata Containers, a “secure container runtime with lightweight virtual machines that feel and perform like containers, but provide stronger workload isolation using hardware virtualization…” and how I tried to use Kata to sandbox privileged login servers.

Microk8s and its Components

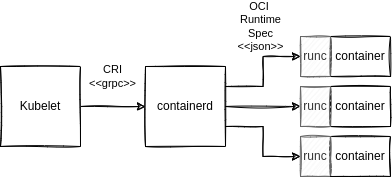

In the CIS Datacenter I use Microk8s, “a Zero-ops, pure-upstream Kubernetes from developer workstations to production.” Kubernetes is not a single service or daemon running on a machine; it’s a bunch of interconnected pieces. Microk8s installs everything with a single snap install command and is up and running on Ubuntu within a minute or two. It can be used to assemble a high availability cluster if you have three or more machines. The diagram is a simplified view of the components needed to run containers.

The kubelet is a Kubernetes component that runs on every node and is generic across implementations of Kubernetes. When you use the kubectl command you’re talking to the API server, which keeps track of all of your YAML manifests. The kubelet watches the API server for pods scheduled to its node and works to bring them to the desired state. The kubelet doesn’t create containers itself. Instead, it communicates with Containerd using the Container Runtime Interface (CRI) over gRPC, a remote procedure call framework that originated at Google. Any container runtime that implements the CRI can be used by Kubernetes. Containerd manages images and converts each pod specification into an OCI runtime specification, which is just a JSON file. The runtime specification is read by runc, which launches and manages the containers.
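To make “just a JSON file” concrete, here is a minimal sketch in Python that builds a stripped-down runtime configuration. The top-level field names (ociVersion, process, root, linux.namespaces) come from the OCI Runtime Specification. The values, the function name and the tiny namespace list are illustrative assumptions. What Containerd actually generates is far larger, with mounts, capabilities, cgroup paths and more.

```python
import json


def minimal_oci_config(args, rootfs="rootfs"):
    """Build a stripped-down OCI runtime config (illustrative only,
    not what Containerd emits for a real pod)."""
    return {
        "ociVersion": "1.0.2",
        "process": {
            "terminal": False,
            "user": {"uid": 0, "gid": 0},
            "args": list(args),  # the command the runtime will execute
            "env": ["PATH=/usr/sbin:/usr/bin:/sbin:/bin"],
            "cwd": "/",
        },
        # Path to the container's root filesystem, relative to the bundle
        "root": {"path": rootfs, "readonly": True},
        "hostname": "nginx",
        "linux": {
            # Each entry asks the runtime for a new namespace of that type
            "namespaces": [
                {"type": t} for t in ("pid", "network", "ipc", "uts", "mount")
            ],
        },
    }


if __name__ == "__main__":
    config = minimal_oci_config(["nginx", "-g", "daemon off;"])
    print(json.dumps(config, indent=2))
```

Any runtime that understands this document can be asked to run the container, which is the whole point of the standard.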

What if we wanted to run some other kind of container?

Kata

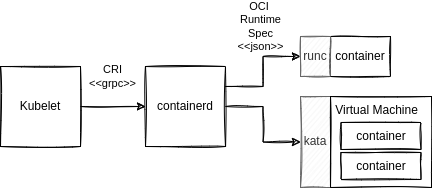

Kata implements the OCI Runtime Specification and does something amazing: it runs each pod inside of a lightweight virtual machine. With Kata, if malicious code inside of a pod escapes the container, it’s trapped in a VM without access to the Kubernetes node. Hardware virtualization is one of the strongest and most widely used forms of workload isolation. The diagram shows a system running both normal and Kata containers.

Kata plugs in to Containerd. Kubernetes pods that specify runtimeClassName, as in the manifest below, will be run in a Kata container:

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-kata
    spec:
      runtimeClassName: kata
      containers:
      - name: nginx
        image: nginx

A pod is a lot like a VM. Containers in a pod have the same IP address and can share memory, devices and local storage. The majority of applications won’t notice the difference between a normal and a Kata pod. But, there are differences:

- Privileged pods have privilege on the VM, not the node, so running an IDS in a Kata sandbox is pointless (by design).
- Likewise, hostPath volumes are volumes on the VM, not the node.
- It’s not possible to use the nvidia runtime or other kinds of hardware acceleration that’s not in the VM.

You can see the full list of Kata limitations in the documentation. Kata also adds overhead for each sandboxed pod because there’s a whole VM and a separate OS to load. This has to be factored into the pod’s resource requests or else resource starvation could happen. There are different strategies for doing that based on how your host implements cgroups. Kata’s host cgroup management documentation describes what to do.
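One mechanism Kubernetes itself offers for this accounting is Pod Overhead: a RuntimeClass can declare a fixed overhead.podFixed amount, which the scheduler adds to the sum of a pod’s container requests. The Python sketch below builds such a manifest as JSON (kubectl accepts JSON as well as YAML) and shows the arithmetic. The overhead numbers are placeholders rather than measured Kata values, the handler name kata is an assumption, and the microk8s add-on may already define its own kata RuntimeClass, so treat this as an illustration rather than something to apply as-is.

```python
import json

# Hypothetical per-pod cost of the Kata VM; measure on your own hosts.
KATA_OVERHEAD = {"cpu_millicores": 250, "memory_mib": 160}


def runtime_class(name="kata", handler="kata", overhead=KATA_OVERHEAD):
    """RuntimeClass manifest (node.k8s.io/v1) with a fixed per-pod overhead."""
    return {
        "apiVersion": "node.k8s.io/v1",
        "kind": "RuntimeClass",
        "metadata": {"name": name},
        "handler": handler,  # must match a runtime configured in Containerd
        "overhead": {
            "podFixed": {
                "cpu": f"{overhead['cpu_millicores']}m",
                "memory": f"{overhead['memory_mib']}Mi",
            }
        },
    }


def effective_request(container_requests, overhead=KATA_OVERHEAD):
    """Sum the container requests, then add the fixed sandbox overhead."""
    total = {"cpu_millicores": 0, "memory_mib": 0}
    for req in container_requests:
        total["cpu_millicores"] += req["cpu_millicores"]
        total["memory_mib"] += req["memory_mib"]
    return {key: total[key] + overhead[key] for key in total}


if __name__ == "__main__":
    print(json.dumps(runtime_class(), indent=2))
    print(effective_request([{"cpu_millicores": 500, "memory_mib": 256}]))
```

With these placeholder numbers, a pod whose single container requests 500m of CPU and 256Mi of memory is scheduled as if it needed 750m and 416Mi.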

You can install Kata as an add-on for microk8s by running the commands:

    $ microk8s enable community
    $ microk8s enable community/kata

⚠️ Unlike other add-ons, you have to run this on each node of the cluster because it installs the kata-containers snap.

But it doesn’t work (yet)…

I tried to make this work on Sunday, July 30 2022. According to the documentation Kata had support for cgroups v2. But when I applied the YAML above my container never started because of this error:

    Warning FailedCreatePodSandBox 1s (x24 over 46s) kubelet Failed to create pod sandbox: rpc error: code = NotFound desc = failed to create Containerd task: failed to create shim: Could not create the sandbox resource controller cgroups: cgroup mountpoint does not exist: not found

Support for cgroups v2 was only merged three days prior. You can see the pull request:

PR: runtime: Support for host cgroupv2 #4397

and the bug report:

Issue: Support for host cgroupv2 #3073

Users of Ubuntu 22.04 and recent Fedora releases, which default to cgroups v2, will have to wait. Hopefully not for too long.
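To tell whether a host is in this situation, a rough check is whether /sys/fs/cgroup is a unified cgroups v2 mount, which exposes a cgroup.controllers file at its root. The Python sketch below does that. The function name is illustrative, and treating any other existing directory as v1 is a simplifying assumption (hybrid setups would also report 1).

```python
import os


def cgroup_version(root="/sys/fs/cgroup"):
    """Guess the cgroup version mounted at `root`.

    Returns 2 for a unified cgroups v2 mount, 1 if the directory exists
    but lacks the v2 marker file, and None if nothing is mounted there.
    """
    # cgroups v2 exposes cgroup.controllers at the root of its hierarchy
    if os.path.isfile(os.path.join(root, "cgroup.controllers")):
        return 2
    if os.path.isdir(root):
        return 1
    return None


if __name__ == "__main__":
    print(f"cgroup version on this host: {cgroup_version()}")
```

A result of 2 means the host is using cgroups v2, the configuration that produced the error above.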